What is computation?

What is computation? Most would say it has something to do with the internet, with adding up numbers, with AI image manipulation. They might think it was something invented by the military to crack codes, or by tech companies to commodify our data.

In fact, computing got its start in the textile industry.

In this post, I hope to give you some understanding of the way that computation refers to an abstract structure that can be instantiated in a very wide variety of physical media (looms, silicon chips, water wheels, brains, social relationships, etc.). It refers to the behavior of physical processes evolving according to a specified rule over time.

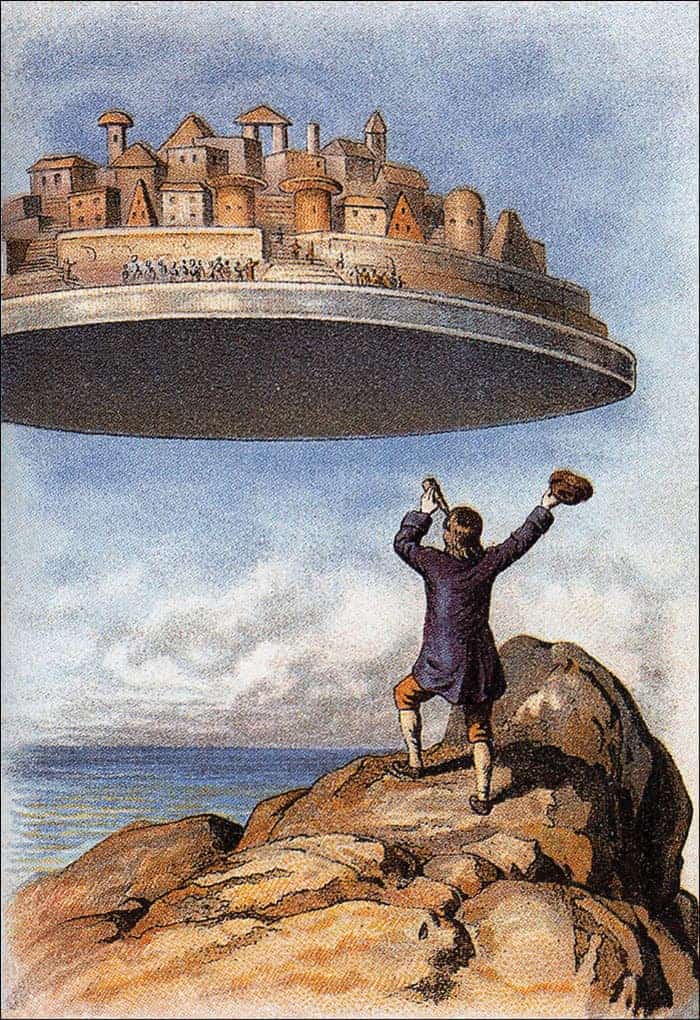

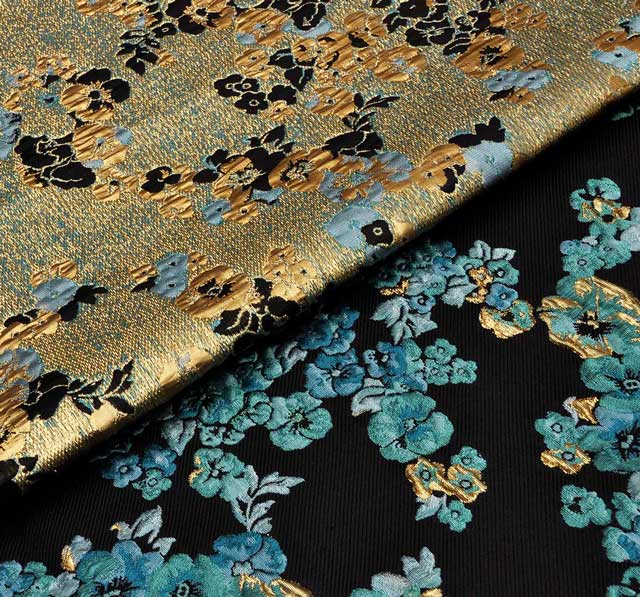

In 1803, weaver Joseph-Marie Jacquard created a device – called the Jacquard loom – capable of producing very fancy fabrics of essentially any desired weave-pattern. The loom can control every warp thread independently and the weaver “programs” the motion of the warp threads using punch cards.

This made it possible to weave intricate designs into the fabric itself. It was just a matter of punching the correct patterns into the input cards.

Jacquard looms were not fully general computers (whatever that might mean) but they demonstrated one of the fundamental ideas underlying computing: that a fixed mechanical apparatus could exhibit a very wide range of behaviors through the variation of an easy-to-modify “input component” (i.e., the punch-cards).

The analytical engine

This idea got picked up by Charles Babbage, who designed a device (never built) which we can now recognize as a general-purpose computer: the analytical engine. The device performed calculations on numbers. It was mechanical and controlled by punch-cards, directly inspired by the Jacquard loom.

Ada Lovelace, the only legitimate child of Lord Byron and a friend and collaborator of Babbage, wrote the first published computer algorithm: a procedure for the analytical engine which could calculate a useful series of numbers called the Bernoulli numbers.

Lovelace also understood that the analytical engine had applications beyond the strictly numerical. She wrote that the analytical engine

might act upon other things besides number, were objects found whose mutual fundamental relations could be expressed by those of the abstract science of operations, and which should be also susceptible of adaptations to the action of the operating notation and mechanism of the engine…Supposing, for instance, that the fundamental relations of pitched sounds in the science of harmony and of musical composition were susceptible of such expression and adaptations, the engine might compose elaborate and scientific pieces of music of any degree of complexity or extent

She had an inkling that computation had to do with relationships and structure in general, not just numerical structure.

Mathematical algorithms

Let’s now change our focus to another thread in the history of computing: algorithms in mathematics.

The development of algorithms (or what were sometimes called “effective procedures”) has been a feature of human culture since at least the mathematical culture of the Babylonians in 2500 BC. Algorithms are mechanical procedures for solving mathematical problems – to be executed by humans, perhaps with the aid of recording devices.

Let’s take a very simple example – a procedure which given a number $n$, determines if it is divisible by 3. It will work as follows:

- If the number $n$ is 0, then it is manifestly divisible by 3 ($0 \cdot 3 = 3$), if it is $1$ or $2$, it is manifestly not divisible by 3. In either of these cases we have our answer.

- If the number is greater than $2$, subtract 3 from it and return to step 1. This is justified because $n$ is divisible by 3 exactly when $n - 3$ is divisible by 3.

Essentially the algorithm repeatedly subtracts 3 from the starting number, seeing what we eventually end up with. For example, if we start with 11, we will go through the sequence $11, 8, 5, 2$ at which point we will terminate with the answer that 11 is not divisible by 3. The algorithm can be seen as grouping the original number into groups of 3. If we end up with a remainder of something other than 0 at the end, then it is not divisible by 3. If we end up with a remainder of 0, then 3 went cleanly into our starting number so it is divisible by 3.

Clearly this algorithm could be generalized to one for divisibility by any number $k$, not just 3. We could turn it into an algorithm for actually computing the (floor of the) quotient $\frac{n}{k}$ by maintaining a counter (started at 0) and adding 1 to the counter every time we subtract 3.

In the case of dividing 11 by 3 for example, our sequence of (number, counter) pairs would go $(11, 0), (8, 1), (5, 2), (2, 3)$ which tells us that $\frac{11}{3}$ is 3 (the value of the counter) with a remainder of $2$. Or if you prefer, $3 \frac{2}{3}$ in the notation of grade school.

Hence we have an algorithm for answering the question of whether one number is divisible by another.

There are many algorithms for all sorts of mathematical problems. For example,

- sorting lists of numbers

- multiplying numbers which are written in base 10 (we learn one in grade school)

- finding approximate occurences of a string of letters in a text (how search bars in documents work)

- finding the shortest path between two points assuming travel along a network of roads with given lengths

- assigning doctors to residency programs based on the lists of preferences of both groups, in such a way that in the resulting assignment no one would want to swap places. (This is actually used to create internship assignments in the US)

A universal language

At some point, it occurred to people to ask if there was a universal language to ask mathematical questions in, and then a universal algorithm, which given a question in this universal language, would say whether the answer was yes or no.

Specifically, this was the idea of Leibniz with his characteristica universalis (the universal language) and calculus rationator (the universal algorithm for determining truth.)

Leibniz even fantasized this language could be applied to areas outside mathematics, writing in a 1706 letter

It is true that in the past I planned a new way of calculating suitable for matters which have nothing in common with mathematics, and if this kind of logic were put into practice, every reasoning, even probabilistic ones, would be like that of the mathematician: if need be, the lesser minds which had application and good will could, if not accompany the greatest minds, then at least follow them. For one could always say: let us calculate, and judge correctly through this, as much as the data and reason can provide us with the means for it. But I do not know if I will ever be in a position to carry out such a project, which requires more than one hand; and it even seems that mankind is still not mature enough to lay claim to the advantages which this method could provide.

What is an algorithm?

Unfortunately for Leibniz, in 1936, Alonzo Church and Alan Turing both proved that there was no “effective procedure” for determining whether a mathematical statement was true or false.

Each accomplished this by giving a mathematical definition for “effective procedure.” Turing’s was what is now called a “Turing machine” and Church’s was something called the lambda calculus. It turns out the two notions are mathematically equivalent. In other words, anything that was computable in Turing’s sense was also computable in Church’s, and vice-versa.

Both notions have been extremely important, but Turing has been given the credit for answering of the question of whether there is a mechanical method for determining mathematical truth.

Why? The answer is that Turing based his definition on an analysis of the human body and how it computes. After all, before computers, all algorithms were executed by human beings and some kind of writing or recording implement (paper, a clay tablet, an abacus, etc.). This gave his argument an intuitive force that Church’s lacked.

Turing shatters the dream

The thing that really made Turing’s argument work was that he gave a precise mathematical definition of algorithm which was able to capture every known algorithm humanity had ever developed, and which so intuitively captured the human capacity for algorithmic behavior that mathematicians agreed it would also capture any future algorithms discovered.

We will not go through Turing’s argument, but we will look at his definition, since it illuminates the nature of computation and its relationship to human behavior.

Here is his definition. It will be inscrutable: don’t worry, we’ll go through and justify each component of it by reference to how human beings compute. A Turing machine (i.e., his model of “algorithm”) is

- A finite set $S$, called the set of “states”.

- A state $s_0 \in S$ called the “initial state”.

- A state $s_f \in S$ called the “final state”.

- A finite set $A$, called the “alphabet” or the set of “symbols”.

- A function $\delta \colon S \times A \to S \times A \times \{1, -1\}$, called the “transition function”. To spell it out, $\delta$ is a function which given a state and a symbol, outputs another state, another symbol, and either plus or minus 1. Because $S$ and $A$ are finite, we could write down a finite “lookup table” that shows the output of $\delta$ for any possible input.

So far at the very least we can see a Turing machine is specified by finitely much data (it would not be good if an algorithm required infinitely much information to specify!)

How did Turing arrive at this definition? He reasoned as follows:

Any algorithm can be performed by a mathematician. A mathematician performs an algorithm by working on sheets of paper. We imagine these sheets of paper laid out neatly on a desk in front of her, which extends infinitely to her right, so that she has as much paper as she wants. The input to the problem will be written on some of these sheets of paper, the rest will start out blank.

For example, with our divisibility algorithm, one of the papers may start out with $11$ and $3$ written on it (if our goal is to divide 11 by 3).

Let’s examine the paper. There are only finitely many distinguishable things that can be written on a piece of paper. This is because the eye has a finite resolution. At a certain point “adding more pixels” to an image corresponding to the paper would be meaningless since the eye cannot perceive them. Therefore, we may say there is a finite set $A$ corresponding to the possible states of writing on a sheet of paper.

Let’s examine the mathematician herself. Her behavior is determined by the state of her body. Turing argues again that the sum total of her behavior may be summarized by a finite set of states. His reason is that if “we admitted an infinity of states of mind, some of them will be ‘arbitrarily close’ and will be confused.”1 These states correspond to the set $S$.

This is probably the shakiest part of the argument when it comes to “human nature” but I think there is good reason to accept it at least as far as algorithmic behavior is concerned. An algorithm is a finite thing, and only concerns compliance with prescribed behavior in a finite set of circumstances. Given that, we can group mental states into clusters that are close enough from the point of view of the behavior they generate in those finitely many circumstances.

Now Turing is ready to finish his argument. In executing an algorithm, at each moment, the mathematician looks at the paper in front of her, decides to write something new on the paper (or not), and then moves to the left or the right to a new piece of paper. Her mind also goes into a new state. What she writes on the paper, whether she goes left or right, and what new state her mind goes in, depends only on her current mental state and what is written on the paper in front of her. Therefore, her behavior is determined by a function $\delta \colon S \times A \to S \times A \times \{ -1, 1 \}$ where $-1$ means go left and $1$ means go right.

Her mind starts in state $s_0$ and she computes simply by iterating this function until she is satisfied (that is, until her mind goes into the state $s_f$). The result of the computation is what remains written on the papers.

What is the point of this?

Turing’s definition has been incredibly successful. Every algorithm discovered since, and every new notion of computation, has indeed already been covered by Turing’s definition. Moreover, it has allowed the investigation into the properties of algorithms in general. This is what is called theoretical computer science.

It may seem that this investigation is pointless beyond the resolution of a mathematical puzzle like the question of the existence of the calculus rationator.

I think there are valid criticisms that can be made of the development of computer technology (to say nothing of the shape of its development under capitalism…) but, given that this text was delivered through the internet and rendered by a computer on your screen shows clearly these ideas have had a practical impact. None of that development would have been possible without the general investigation initiated by Turing.

Probably the single most important result has been the development of general purpose CPUs – that is, fixed physical devices capable of performing any computation. But from my perspective as a cryptographer, one of the greatest practical fruits of this investigation has been cryptography: the study of computation and communication in the presence of adversarial behavior. For example, we have developed encryption: a method for encoding secret messages in such a way that no algorithm can decode them unless they possess a secret key.2 Such technology is only possible in light of the analysis of algorithms in general.

This is a dramatic improvement on the state of things as late as World War 2, when it was assumed that any method for encrypting information could be broken given enough time and enough observations of encrypted messages.

The Church–Turing thesis

The conjecture that the Turing machine will capture every notion of effective computation we come up with in the future is called the Church–Turing thesis. To state it fully (per Wikipedia): “a function on the natural numbers can be calculated by an effective method if and only if it is computable by a Turing machine”.

This conjecture is supported by the fact that every new notion of computation developed since has been proven to be simulable by Turing machines.

Some have taken this conjecture even farther, supposing an “effective method” to be a “physically realizable method”. This is captured in the “physical Church–Turing thesis”: that any function which can be computed by a physically realizable method is computable by a Turing machine. If you think about this, this implies that the evolution of any physical system can be approximated by a Turing machine. One has to sift through paragraphs of grandiose claims, but Wolfram’s writings have useful articulations and applications of this point of view, and many interesting conjectures about physics resulting from computational considerations.

Therefore, computation in the Turing sense is a kind of generalized model for the evolution of physical systems. This would really not be that surprising: a Turing machine is an abstraction that models any process that evolves locally (in space) over time via a deterministic role. The one hitch is the finiteness of the state space and symbol space, but this is not such a big deal given the general assumption of continuity for physical systems and the relationship between continuity and finiteness. Cellular automata probably provide a more convincing analogy with physics (since the same local rule is applied everywhere in space instead of just at the tape head), but they are equivalent in computational power to Turing machines. However, one should not take any model of computation too seriously: the physical world will not in our lifetimes be completely characterized by a simple computational model, so we should only rely on the computational point-of-view to make general conclusions, rather than ones that depend on the fine-details of a model.

-

Alan Turing, On Computable Numbers, With an Application to the Entscheidungsproblem, p. 250. ↩︎

-

Cryptography in an absolute sense actually requires certain assumptions about computation that we have yet to prove, although many believe they are true. Its conditional results are still useful and rely on the understanding of computation in general which Turing gave us. ↩︎